The advent of ChatGPT has redefined how people think about AI. It has taken the digital world by storm and, at the same time, made people question the legal framework surrounding it. It has no doubt eased the work of many professions, from writers to coders. But it has also raised a question in everyone’s mind: is it ethical not to tell people that what they are reading or seeing might be AI-generated? The answer to this was AI detection tools, a Darwinian response that was desperately needed. But the question is, do they work?

AI-generated content is not inherently bad, although a case can be made against it in some settings. Academic papers, for example, are meant to be written after detailed study, which takes a long time, so it is useful to know whether what you are reading was written by a human or by AI. AI detection tools have presented themselves as a panacea in these turbulent times, but do they work? My experience, and that of many others, tells a different story.

AI detection is quite different from plagiarism detection. A plagiarism checker shows what percentage of a work is plagiarized. The AI detection tools I used instead show the probability that your work “MIGHT BE” written by AI. In other words, they do not show how much of the text was written by AI, only how likely it is that the work as a whole was AI-assisted. So far, I was OK with this methodology. But it creates a whole new set of problems.

Suppose a detector shows a 25% probability that your article is AI-generated, even though you wrote it entirely yourself, straight from your own mind. That means the detector thinks there is a real chance your work was written by an AI assistant. I gave ChatGPT some prompts and these are some of the responses I got.

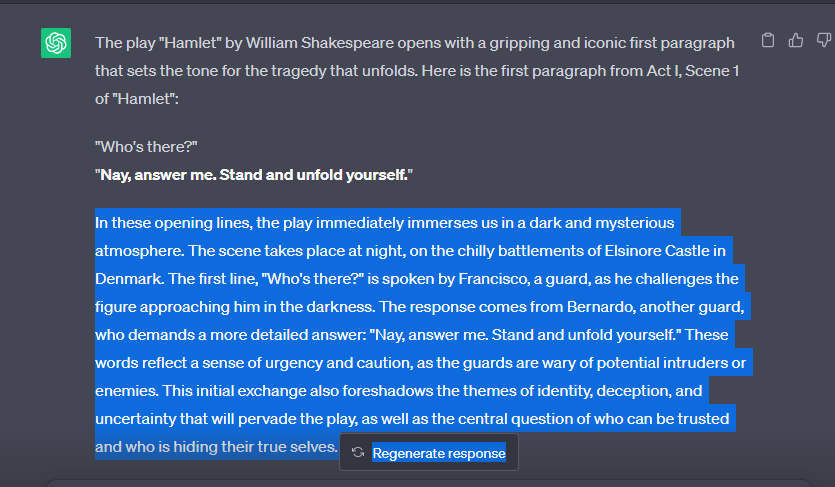

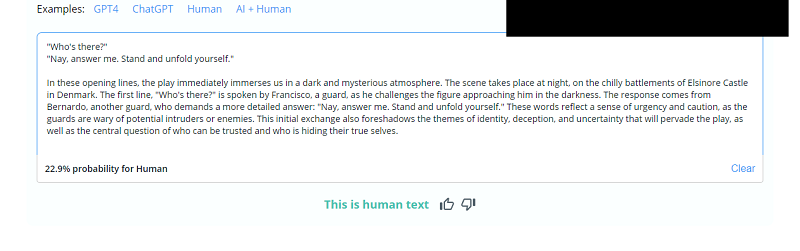

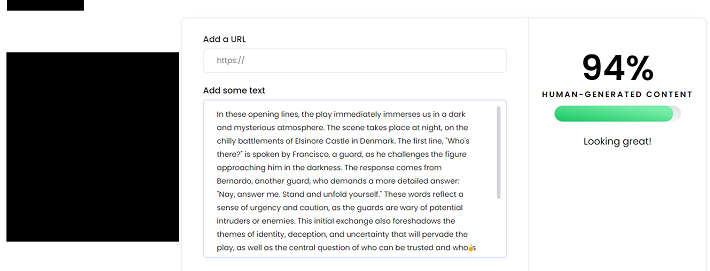

Here I prompted it to write about Hamlet’s opening line, then fed the response to an AI detector I found on Google. This was the answer I got.

It rated the text as 94% human-generated, even though, as you can see, I copied it directly from ChatGPT. Other tools I tried were no different. It is easy to bypass detection with slightly complicated prompts.

I played around with other prompts and those too were plagued by inaccuracies.

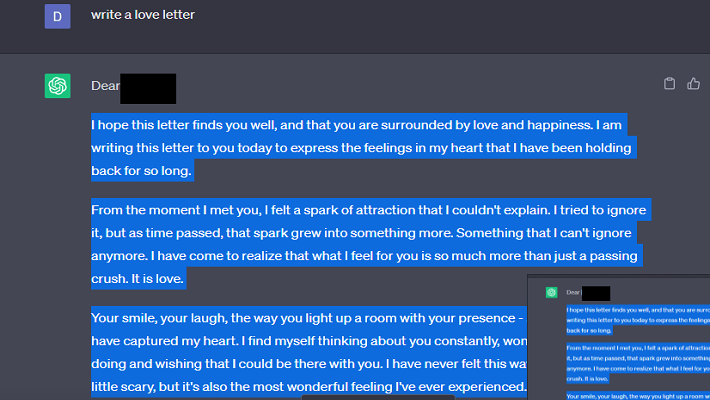

Here I asked ChatGPT to write a simple love letter, and the response was totally different from what I expected.

It left me really impressed with its way with words. I ran it through the AI detector tool and this was the response I got.

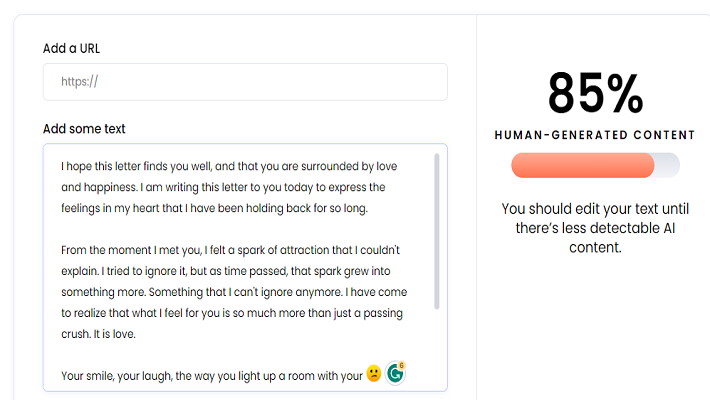

Here it rated my copy-pasted love letter as 85% human-written, which is totally false. The problem is not only that it flags AI-written work as human-written; the reverse is also possible. I ran a lot of my own work, written before ChatGPT came out in November 2022, through the tool, and it flagged much of it as AI-generated, which was appalling.

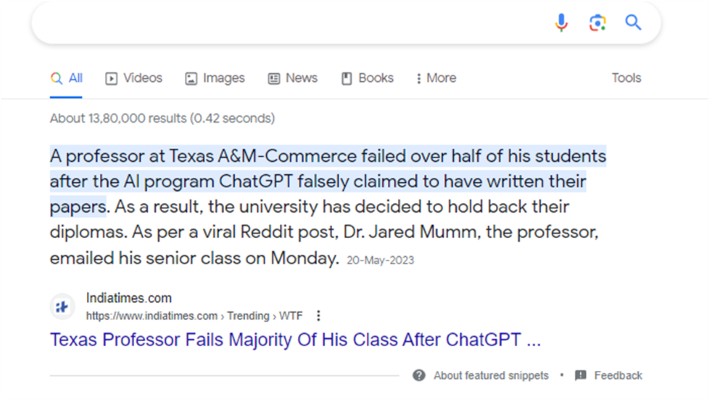

In academic settings where students are graded and these tools are used, a false alarm can lead to devastating results such as this.

We do not know the entire story; they may or may not have used ChatGPT. But it still creates fear, and half-baked measures will jeopardize the future of students.
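To get a feel for why false alarms matter at classroom scale, here is a rough back-of-the-envelope sketch in Python. Every number in it is made up for illustration: the class size, the detector's false-positive rate, and its detection rate are assumptions, not measurements from any tool I tested, and the function names are hypothetical.

```python
def expected_false_flags(num_human_essays: int, false_positive_rate: float) -> float:
    """Expected number of human-written essays wrongly flagged as AI."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return num_human_essays * false_positive_rate


def share_of_flags_that_are_correct(
    num_human_essays: int,
    num_ai_essays: int,
    false_positive_rate: float,
    detection_rate: float,
) -> float:
    """Fraction of all flagged essays that really were AI-written."""
    false_flags = num_human_essays * false_positive_rate
    true_flags = num_ai_essays * detection_rate
    total_flags = false_flags + true_flags
    if total_flags == 0:
        return 0.0
    return true_flags / total_flags


# Hypothetical scenario (assumed numbers, not real measurements):
# 200 essays written by students themselves, 20 written with AI,
# a detector that wrongly flags 5% of human work and catches 60% of AI work.
wrongly_flagged = expected_false_flags(200, 0.05)
correct_share = share_of_flags_that_are_correct(200, 20, 0.05, 0.60)

print(f"Honest students flagged: about {wrongly_flagged:.0f}")
print(f"Share of flags that are correct: about {correct_share:.0%}")
```

With these assumed numbers, roughly 10 of the 200 honest students would be flagged, and close to half of all flags would point at someone who did nothing wrong. The real rates for any given tool are unknown to me, which is exactly the problem.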

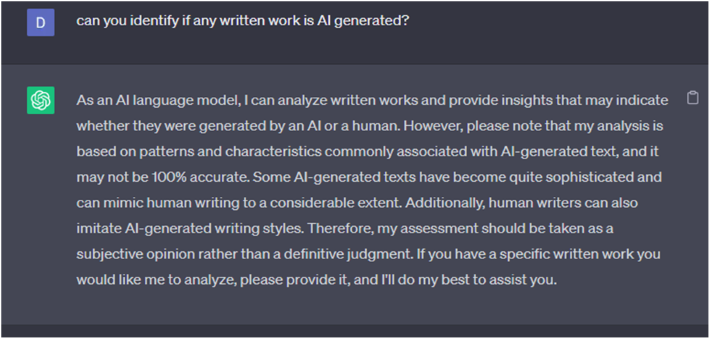

The idea of asking an AI generator whether it can detect text written by AI may sound absurd, but I tried it and this was the response I got.

It clearly says that its word should be taken with a grain of salt. LLMs are trained on human data: ChatGPT has been fed a large body of work written by actual humans, and it draws on that material when responding to the prompts it is given.

Personally, I think whatever AI detection tools report should be taken with a massive pinch of salt. I am certain that upcoming iterations of ChatGPT and other AI assistants will be more human-like, and that it will become harder to tell whether something is AI-written. This problem is relatively new and there are no solutions to it yet.